Wild Wild Wikimedia

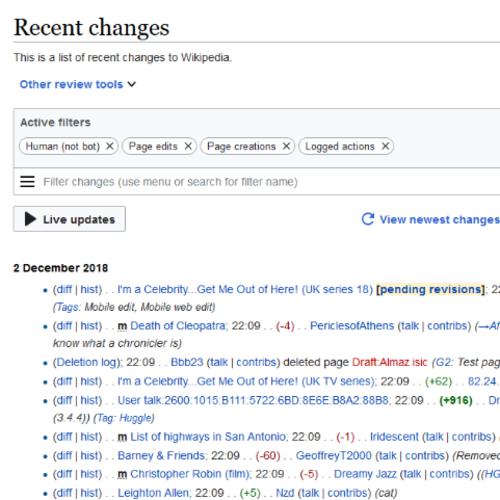

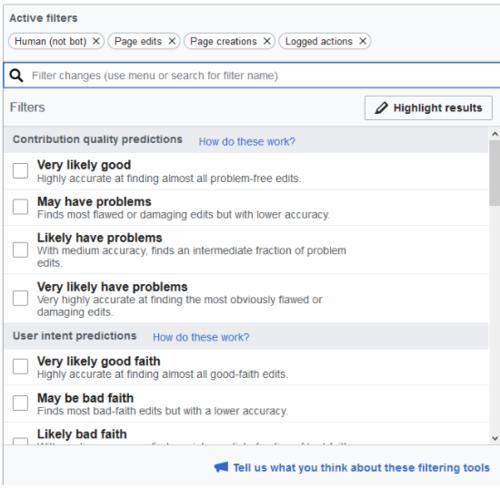

To help run Wikipedia, the Wikimedia Foundation develops and maintains an artificial intelligence model that flags problematic edits to articles. The model makes two classifications for each edit: whether it is damaging, and whether it was made in good or bad faith. Depending on these classifications, the model may flag the edit for review.

WildWildWikimedia contributes to this model by creating new identification features that the model does not currently use. These features are meant to identify violations of the rules specified in Wikipedia’s style guidelines. Specifically, they attempt to detect puffery, idioms and clichés, vulgarities and obscenities, and neologisms; the model then uses these signals when classifying an edit.
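
As a rough illustration of what such a feature might look like, the sketch below counts how many puffery terms and clichés an edit adds by comparing the text before and after the edit. It is only a minimal sketch under stated assumptions: the word lists, the function names (`tokenize`, `count_terms`, `count_phrases`, `edit_features`), and the feature names are hypothetical and are not taken from the project or from the Wikimedia model's own code.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only;
# a real feature would draw on much larger curated lists.
PUFFERY = {"legendary", "world-class", "renowned", "iconic"}
CLICHES = ("at the end of the day", "tip of the iceberg")

# Lowercase words, allowing internal hyphens and apostrophes ("world-class").
TOKEN_RE = re.compile(r"[a-z]+(?:[-'][a-z]+)*")


def tokenize(text):
    """Split text into lowercase word tokens, dropping punctuation."""
    return TOKEN_RE.findall(text.lower())


def count_terms(tokens, terms):
    """Count tokens that appear in a set of single-word terms."""
    return sum(1 for token in tokens if token in terms)


def count_phrases(text, phrases):
    """Count occurrences of multi-word phrases in punctuation-free text."""
    normalized = " ".join(tokenize(text))
    return sum(
        len(re.findall(r"\b" + re.escape(phrase) + r"\b", normalized))
        for phrase in phrases
    )


def edit_features(old_text, new_text):
    """Return per-edit feature values as (count after) - (count before).

    A positive value means the edit introduced matching language;
    a negative value means the edit removed it.
    """
    old_tokens, new_tokens = tokenize(old_text), tokenize(new_text)
    return {
        "puffery_added": count_terms(new_tokens, PUFFERY)
        - count_terms(old_tokens, PUFFERY),
        "cliches_added": count_phrases(new_text, CLICHES)
        - count_phrases(old_text, CLICHES),
    }
```

Computing each feature as a difference between revisions, rather than as a count on the new text alone, is a design choice made here so that an edit is not penalized for language that was already in the article. The same pattern could be extended with additional lists for vulgarities or neologisms.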